Advanced Configuration: Dealing with Large Data Volumes (LDV)

Got millions of records in your Salesforce org? You’ll need to split them into smaller data sets.

Example: There are 20 million contacts. Masking them in a single run could take tens of hours, and the masking operation can also run into SOQL time-outs. A better approach is to slice them into five data sets of 4 million records each. The five data sets can then be processed in parallel, which also helps avoid SOQL time-out exceptions.
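The arithmetic behind the example can be sketched in a few lines of Python. This is only an illustration: the function name `plan_slices` is hypothetical, and the 4 million limit is taken from the example above rather than being a recommended value for every org.

```python
def plan_slices(total_records, max_per_slice):
    """Return the size of each slice so that no slice exceeds max_per_slice.

    Hypothetical helper for planning only; it does not talk to Salesforce.
    """
    if total_records <= 0 or max_per_slice <= 0:
        raise ValueError("both arguments must be positive")
    # Integer ceiling division: the smallest number of slices that fits the limit.
    count = (total_records + max_per_slice - 1) // max_per_slice
    # Spread the records as evenly as possible across the slices.
    base, extra = divmod(total_records, count)
    return [base + 1 if i < extra else base for i in range(count)]


if __name__ == "__main__":
    # 20 million contacts with at most 4 million per slice gives five equal slices.
    print(plan_slices(20_000_000, 4_000_000))
```

The number of slices is what matters for the next step: each slice needs its own Filter Criteria so that the data sets do not overlap.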

DataMasker’s ‘Filter Criteria’ can be used to slice large data sets into smaller ones. Filter Criteria contains the WHERE clause of a SOQL query.

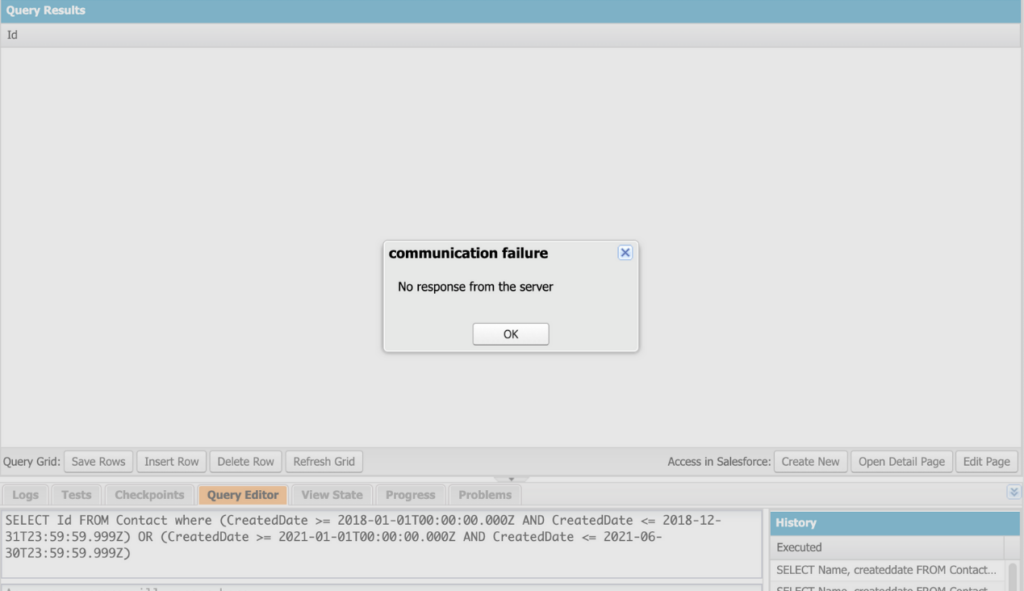
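One possible way to produce non-overlapping Filter Criteria is to slice on a date field. The sketch below assumes the records are sliced on `CreatedDate`, and the helper name `created_date_slices` is hypothetical. It also assumes the Filter Criteria field takes the clause body without the `WHERE` keyword, so check this against your DataMasker screen.

```python
from datetime import datetime, timezone


def soql_datetime(dt):
    """Format a datetime as an unquoted SOQL dateTime literal in UTC."""
    return dt.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def created_date_slices(start, end, parts):
    """Split [start, end) into `parts` equal time windows.

    Returns one WHERE-clause body per window. The windows do not overlap,
    so every record in the range falls into exactly one slice.
    """
    if parts <= 0 or end <= start:
        raise ValueError("need parts > 0 and end > start")
    step = (end - start) / parts
    clauses = []
    for i in range(parts):
        low = start + step * i
        # Use the exact end for the last window to avoid rounding drift.
        high = end if i == parts - 1 else start + step * (i + 1)
        clauses.append(
            f"CreatedDate >= {soql_datetime(low)} AND CreatedDate < {soql_datetime(high)}"
        )
    return clauses


if __name__ == "__main__":
    first = datetime(2020, 1, 1, tzinfo=timezone.utc)
    last = datetime(2020, 1, 11, tzinfo=timezone.utc)
    for clause in created_date_slices(first, last, 5):
        print(clause)
```

Equal time windows do not guarantee equal record counts, so the size of each slice still needs to be checked before masking.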

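The challenge with using the WHERE clause in this situation is that a SOQL query against a large chunk of records can itself time out. This makes it difficult to work out how many records each slice contains, and therefore how many records to mask at a time. The methods below submit the query as a Bulk API job instead, so the count can be obtained without a time-out.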

Method 1: Use Postman

This is where Postman comes in. Postman is an API platform for building and using APIs. It simplifies each step of the API lifecycle and streamlines collaboration, helping you create better APIs faster.

Follow these steps to use Postman:

Step 1: Refer to the linked article to download Postman and connect it to your Salesforce org.

Step 2: Once the connection is made, you can submit simple query batch jobs using the Bulk API.

This makes it easier to get the record count, because the Bulk API job does not time out. For details, refer to the linked article.

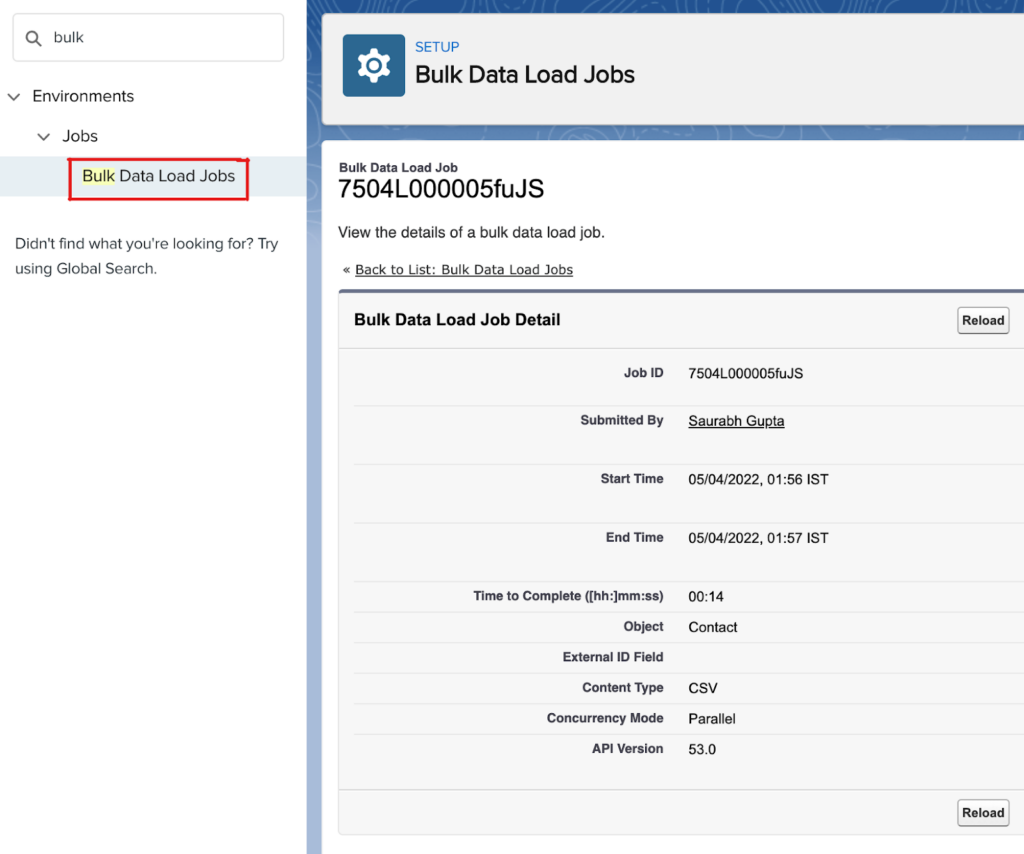

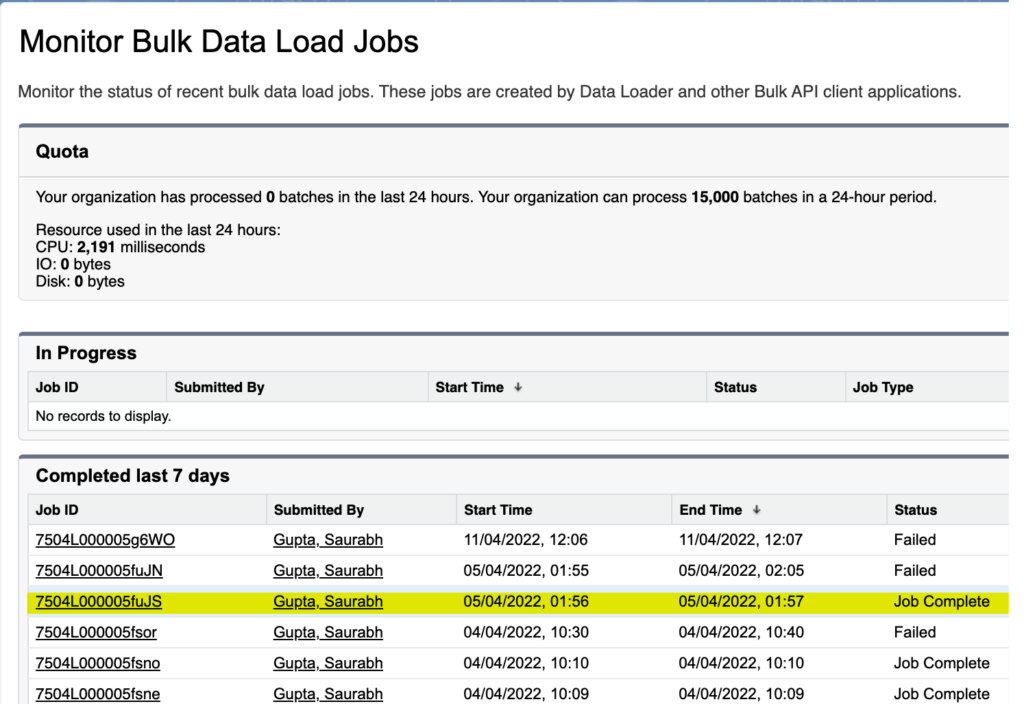
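For readers who prefer a script to Postman, the request behind Step 2 can be sketched as follows. This assumes Bulk API 2.0, whose query jobs are created with a POST to `/services/data/vXX.0/jobs/query`. The instance URL, access token, and API version shown are placeholders, and the helper name `build_bulk_query_request` is hypothetical. The sketch only builds the request and does not send it.

```python
import json
import urllib.request


def build_bulk_query_request(instance_url, access_token, soql, api_version="58.0"):
    """Build (but do not send) a Bulk API 2.0 request that creates a query job.

    All argument values are supplied by the caller; the default api_version
    is only an example and should match a version your org supports.
    """
    url = f"{instance_url.rstrip('/')}/services/data/v{api_version}/jobs/query"
    body = json.dumps({"operation": "query", "query": soql}).encode("utf-8")
    headers = {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


if __name__ == "__main__":
    request = build_bulk_query_request(
        "https://example.my.salesforce.com",  # placeholder instance URL
        "PLACEHOLDER_TOKEN",                  # placeholder access token
        "SELECT Id FROM Contact",
    )
    print(request.get_method(), request.full_url)
    # To submit the job, pass the request to urllib.request.urlopen(request).
```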

Step 3: Once a job is submitted, go to ‘Navigate’ -> ‘Salesforce Dashboard Setup’ -> ‘Bulk Data Load Jobs.’

There you can see the status of the job and the number of records it processed. Use that count to size your slices so that masking is not impacted by time-outs.

Method 2: Use Workbench

Workbench is another way to get a record count when a regular SOQL query would time out.

Workbench is a web-based suite of tools that lets administrators and developers interact with a Salesforce org through the Salesforce APIs.

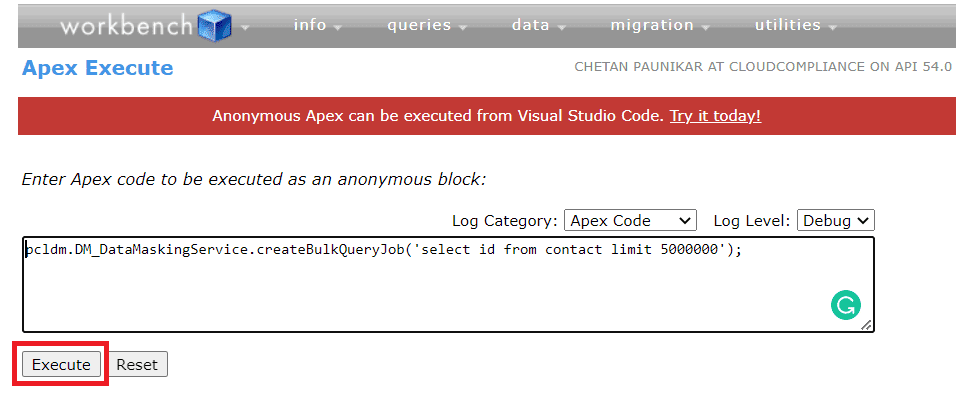

DataMasker exposes an API that lets you execute Bulk API queries:

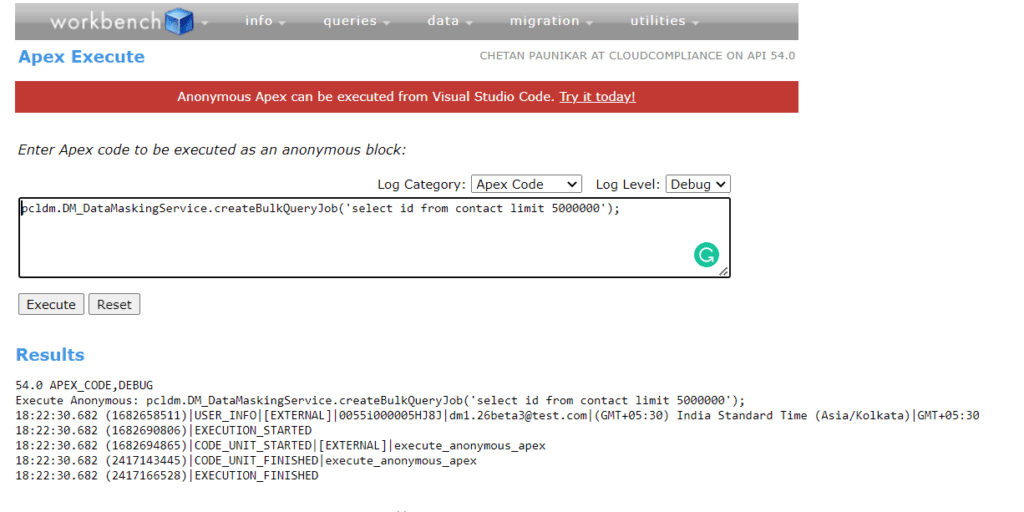

pcldm.DM_DataMaskingService.createBulkQueryJob('____');

For example:

pcldm.DM_DataMaskingService.createBulkQueryJob('select id from Contact limit 3000000');

Go to Workbench, log in to your Salesforce org, and click ‘Apex Execute’. Enter the createBulkQueryJob statement with your query.

Click on ‘Execute’.

After the statement executes, Workbench displays the result.

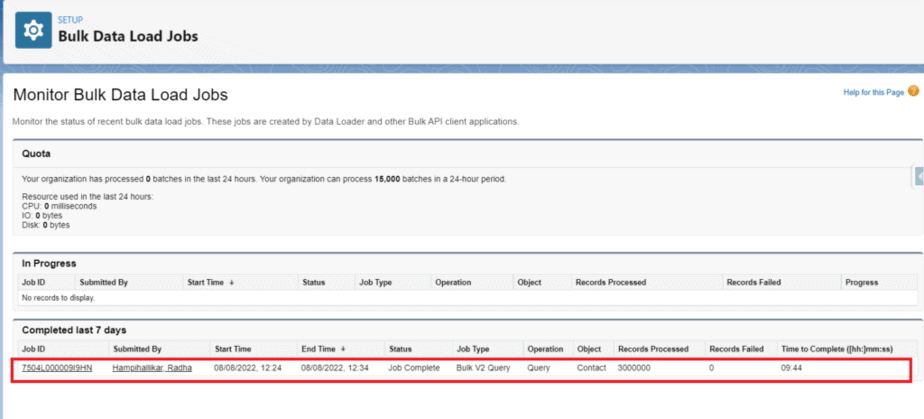

Then check the status of the job under ‘Bulk Data Load Jobs’.
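When several slices need to be counted, the statements to paste into ‘Apex Execute’ can be generated rather than typed by hand. The sketch below is one way to do that. The helper name `count_job_statement` is hypothetical, and it assumes `createBulkQueryJob` accepts a query with a WHERE clause in the same way it accepts the `limit` query shown above.

```python
def count_job_statement(sobject, where_clause):
    """Return an Apex statement that submits a Bulk API query job for one slice.

    The SOQL text is embedded in an Apex string literal, so backslashes and
    single quotes inside it are escaped.
    """
    soql = f"select id from {sobject} where {where_clause}"
    escaped = soql.replace("\\", "\\\\").replace("'", "\\'")
    return f"pcldm.DM_DataMaskingService.createBulkQueryJob('{escaped}');"


if __name__ == "__main__":
    # Example slices; replace them with the Filter Criteria you plan to use.
    slices = [
        "CreatedDate < 2020-01-01T00:00:00Z",
        "CreatedDate >= 2020-01-01T00:00:00Z",
    ]
    for clause in slices:
        print(count_job_statement("Contact", clause))
```

Each generated statement creates its own job, so the record count for every slice can be read from the job list.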

Method 3: DataMasker API

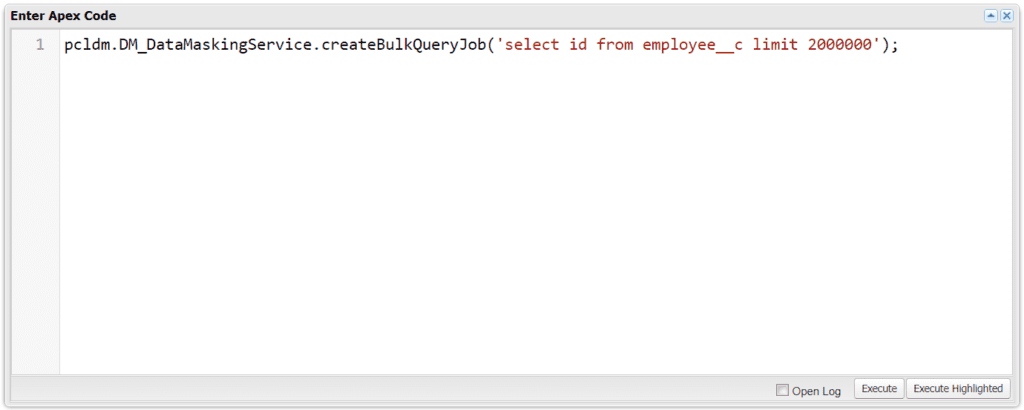

The same DataMasker API can be called from the Developer Console to execute Bulk API queries. Open the Execute Anonymous window and enter the statement, for example:

pcldm.DM_DataMaskingService.createBulkQueryJob('select id from employee__c limit 2000000');

Click on ‘Execute’.

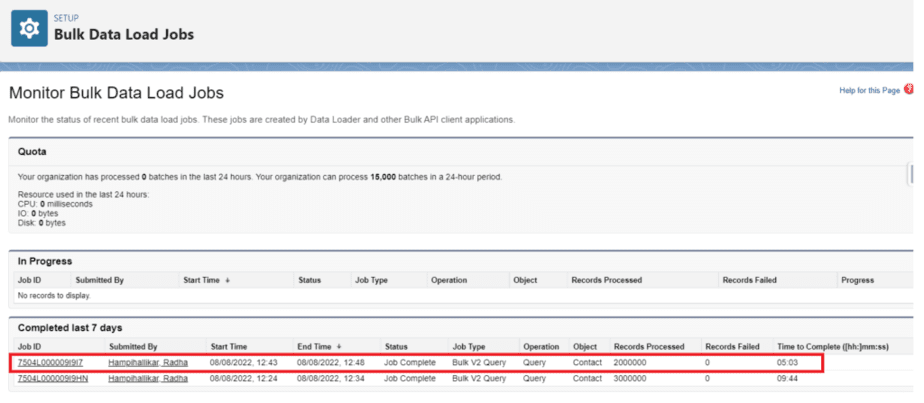

Then check the status of the job under ‘Bulk Data Load Jobs’.

Need Help?

If you have questions about this documentation, please contact our support team.